VMware's Universal Broker gives Horizon users access to multiple Pods through a single URL, routing sessions based on resource availability, entitlements, and shortest network paths. Traditionally, multi-site Horizon deployments require a combination of Cloud Pod Architecture (CPA) and third-party global load balancers (GSLBs) to provide fluid failover and fallback. Universal Broker, part of the Horizon Control Plane, replaces these requirements with a purpose-built, SaaS-based offering. The solution leverages the control plane's privileged insight into Horizon environments to deliver more efficient and error-free placement of Horizon sessions across Pods. This addresses shortcomings of traditional CPA/GSLB deployments while laying the groundwork for integrating Horizon deployments across various cloud vendors.

The Innovation Of Universal Broker For Horizon

Along with making it easier to deploy and support, the cloud-based nature of Universal Broker is key to more efficient routing and placement of Horizon sessions across Pods. As part of the Horizon Control Plane, it has privileged information about home sites, resource availability, and established sessions, affording its global load balancing functionality a greater degree of integration with Horizon. Traditionally, third-party GSLBs and CPA can at best be well coordinated with Horizon, but they come nowhere near the synchronization achieved through Universal Broker. A further departure from the traditional model is how Universal Broker bifurcates Horizon protocol traffic into two separate network paths. The primary Horizon protocol traffic, which handles authentication, travels between the client endpoint device and Universal Broker in the cloud. The secondary Horizon protocol traffic, the display protocol, traverses a second path between the client and the actual Horizon environment.

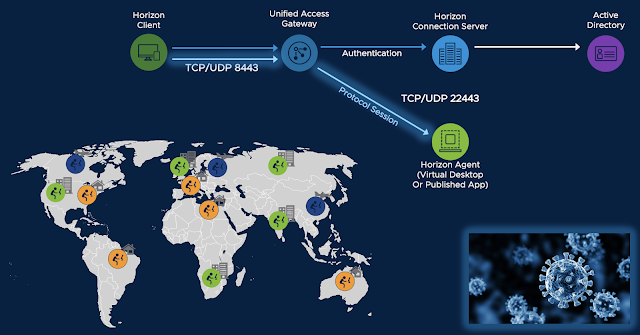

For context, below is a representation of the flow of Horizon protocol traffic for a normal remote Horizon connection. Typically, the primary protocol traffic runs over TCP port 443 from the endpoint client through the Unified Access Gateway (UAG) appliance to the Connection Server. If this authentication is successful, the Horizon secondary protocol kicks into gear, establishing a display protocol connection between the endpoint client and the virtual desktop. Under normal circumstances, using the Blast display protocol, this would consist of UDP 8443 traffic to the UAG appliance and UDP 22443 traffic from the UAG appliance to the virtual desktop.

With Universal Broker, the Horizon protocol is bifurcated, traveling across two separate network paths. The primary Horizon protocol consists of a connection directly to Universal Broker for authentication over TCP 443. After successful authentication, the secondary Horizon protocol traverses a connection between the endpoint device and the Horizon Pod itself. Again, under normal circumstances for Blast, it would be UDP 8443 to the UAG appliance and UDP 22443 from the UAG appliance to the virtual desktop. Overall, we're talking about two very separate network paths: one from the endpoint device to the Universal Broker service in the Control Plane, and a second from the endpoint device to the Horizon environment itself.
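To make the two paths concrete, here's a small Python model of the hops described above. It's a sketch, not a network diagram: the hop labels are my own shorthand, only the Blast ports named in this post are modeled, and the UAG-to-Connection Server leg is assumed to stay on TCP 443 as in the traditional flow.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Hop:
    source: str
    destination: str
    transport: str  # "TCP" or "UDP"
    port: int
    channel: str    # "primary" (authentication) or "secondary" (display protocol)


def traditional_flow() -> List[Hop]:
    """Remote Horizon 8 connection without Universal Broker (Blast)."""
    return [
        Hop("client", "UAG", "TCP", 443, "primary"),
        Hop("UAG", "Connection Server", "TCP", 443, "primary"),
        Hop("client", "UAG", "UDP", 8443, "secondary"),
        Hop("UAG", "virtual desktop", "UDP", 22443, "secondary"),
    ]


def universal_broker_flow() -> List[Hop]:
    """Same connection via Universal Broker: the primary protocol goes to the cloud."""
    return [
        Hop("client", "Universal Broker", "TCP", 443, "primary"),
        Hop("client", "UAG", "UDP", 8443, "secondary"),
        Hop("UAG", "virtual desktop", "UDP", 22443, "secondary"),
    ]


def client_destinations(flow: List[Hop], channel: str) -> Set[str]:
    """Where the endpoint device itself sends traffic for one channel."""
    return {h.destination for h in flow if h.source == "client" and h.channel == channel}


for name, flow in (("traditional", traditional_flow()),
                   ("universal broker", universal_broker_flow())):
    print(name, sorted(client_destinations(flow, "primary")),
          sorted(client_destinations(flow, "secondary")))
```

Running it prints `traditional ['UAG'] ['UAG']` followed by `universal broker ['Universal Broker'] ['UAG']`: with Universal Broker, the endpoint talks to two different places, and the UAG only ever sees display traffic.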

So, when Universal Broker is in the mix, the entire primary Horizon protocol connection is shifted and offloaded to the cloud. Though the vast majority of Horizon protocol traffic is secondary protocol traffic, this shift of the primary protocol traffic is still significant, simplifying support of initial connectivity. If a user fails to authenticate to their Horizon environment through Universal Broker, you don't need to investigate site-specific challenges in providing external access to Horizon services. No load balancers to troubleshoot, no questions about client network connectivity to your on-premises environment and, if you leverage a subdomain of vmwarehorizon.com, no concerns about DNS records or expired certificates. All these typical primary protocol concerns are offloaded to Universal Broker. If the user isn't seeing their entitlements, most likely they're fat-fingering their password or simply don't have access to the internet. By shifting the primary protocol exchange to the cloud, a lot of the nitty-gritty troubleshooting for remote connectivity is circumvented or at least simplified.

The Horizon secondary protocol, the display protocol, is the most relevant and impactful when it comes to user experience. Fortunately, the network path traversed by this protocol is established post-authentication based on Universal Broker's assessment. An optimal Horizon Pod is judiciously selected based on "insider information" regarding the status and configuration of the Horizon environment and entitlements. This leads to global load balancing that's better informed by the Horizon solution, providing tighter integration than is normally achievable. It's easy to appreciate this improvement when you consider an esoteric pitfall of the older, traditional GSLB/CPA model: Horizon protocol traffic hair-pinning.
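VMware doesn't publish Universal Broker's placement algorithm, so the following is only a hypothetical sketch of how the criteria named in this post (entitlements, established sessions, home sites, resource availability, shortest network path) might be combined. The class, function, priority order, and latency figures are all my own illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class PodState:
    name: str
    entitled_users: Set[str]
    free_capacity: int
    open_sessions: Set[str] = field(default_factory=set)  # users with a session already here


def choose_pod(user: str, pods: List[PodState], latency_ms: Dict[str, float],
               home_site: Optional[str] = None) -> Optional[str]:
    """Hypothetical placement order for illustration, not VMware's algorithm."""
    entitled = [p for p in pods if user in p.entitled_users]
    # 1. Reconnect to an existing session, even if another Pod is closer.
    for pod in entitled:
        if user in pod.open_sessions:
            return pod.name
    # New sessions only go to Pods with spare capacity.
    available = [p for p in entitled if p.free_capacity > 0]
    if not available:
        return None
    # 2. Honor the user's home site when that Pod can take the session.
    for pod in available:
        if pod.name == home_site:
            return pod.name
    # 3. Otherwise, take the shortest network path from the user's current location.
    return min(available, key=lambda p: latency_ms[p.name]).name


# Illustrative latencies from Wyoming; not measurements.
pods = [
    PodState("New York", {"banker"}, free_capacity=10, open_sessions={"banker"}),
    PodState("Los Angeles", {"banker"}, free_capacity=10),
]
print(choose_pod("banker", pods, {"New York": 60.0, "Los Angeles": 25.0}))  # New York
```

The key point is that the decision happens before any UAG is involved, so the chosen Pod and the UAG the client later uses can't disagree.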

Horizon Protocol Traffic Hair-Pinning - Ouch!

For over a decade now, VMware has offered a fully redundant Horizon architecture for customers who need a bulletproof, highly available Horizon deployment. It used to be referred to as "AlwaysOn Point Of Care," in homage to the healthcare customers that were particularly fond of the solution. Nowadays, it's considered just plain redundancy, or a Horizon Multi-Site architecture. Prior to Universal Broker, an absolute requirement for this architecture was some combination of a third-party global load balancer and Cloud Pod Architecture (CPA). The GSLB solution provides a single namespace for multiple sites, while CPA replicates entitlements, resource status and current session information between the separate Horizon Pods, providing minimal integration between otherwise fully redundant and independent Horizon environments. This ensures fluid failover and fallback in response to disruptions and outages, while also ensuring folks get properly routed according to home site preferences or pre-existing sessions.

This model has been good enough to make it through the last decade, but it has a couple of caveats that are less than ideal for traditional on-premises deployments and complete deal breakers for certain types of cloud-based deployments. First off, it's predicated on network connectivity and east-west traffic between Horizon Pods, a requirement for replicating entitlements, resource availability and session status across separate environments. That's a potential challenge when you consider these Pods could be very far away from each other or on different clouds.

While CPA traffic isn't too extensive and is typically manageable, the need for east-west traffic between sites can really spike when remote connections through UAG appliances are in the mix and global load balancing isn't executed flawlessly. This ties back to UAG and how it handles the Horizon protocol. Traditionally, both the primary and secondary Horizon protocol traffic must go through the same UAG appliance. In fact, outside of Universal Broker deployments, it's an absolute requirement. UAG's prime directive is to ensure all display protocol traffic it passes is on behalf of a strongly authenticated user. To achieve this, it only passes secondary protocol traffic for sessions it has handled primary protocol traffic for. There's no way for UAG to communicate the authenticity of a Horizon user to other UAGs. So, under normal circumstances, the same UAG that handles primary protocol traffic must handle the secondary protocol traffic, or the session will break. This is by design. Now, this UAG requirement can have some rough consequences in the context of a traditional Horizon multi-site architecture. If the third-party global load balancer doesn't do a flawless job routing folks to the proper Pod, there's potential for an inefficiency referred to as Horizon protocol traffic hair-pinning.
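The UAG rule above can be captured in a toy model: each appliance remembers only the sessions whose primary protocol traffic it handled, and it has no way to learn about sessions authenticated elsewhere. This is an illustration of the behavior described here, not UAG's actual implementation; the class name and session ID are hypothetical.

```python
from typing import Set


class ToyUag:
    """Toy model: display traffic passes only for sessions this same appliance authenticated."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._authenticated: Set[str] = set()  # session IDs seen on the primary protocol

    def handle_primary(self, session_id: str, auth_ok: bool) -> bool:
        if auth_ok:
            self._authenticated.add(session_id)
        return auth_ok

    def allow_secondary(self, session_id: str) -> bool:
        # No channel exists to learn about sessions authenticated by other UAGs.
        return session_id in self._authenticated


uag_la, uag_ny = ToyUag("Los Angeles"), ToyUag("New York")
uag_la.handle_primary("session-42", auth_ok=True)
print(uag_la.allow_secondary("session-42"))  # True
print(uag_ny.allow_secondary("session-42"))  # False: the session would break
```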

For example, say an organization has two Pods, one in New York and one in Los Angeles. A jet-setting banker working for that organization connects to his virtual desktop from a Manhattan penthouse. Accordingly, the GSLB routes him to the Horizon Pod in New York. He disconnects from his virtual desktop, kisses his wife and kids goodbye, then jumps on a private jet with his mistress for a weekend getaway to Grand Teton National Park in Wyoming. When he reaches the hotel room in Wyoming he remembers, "oh shoot, I forgot a quick thing for work," then connects to his Horizon environment. The global load balancer sees he's in Wyoming and routes him to the closer California Pod. He hits the Horizon Connection Server in California and, through CPA, the Connection Server is aware of the banker's currently open session in New York. It routes him back to that open session. Great, except that because he initially connected to the California Pod through a UAG appliance in California, he has to continue using that UAG appliance. So his traffic has to go from Wyoming to California, then back across the US from the UAG appliance in California to the Horizon Pod in New York. An extreme but perfect example of Horizon protocol traffic hair-pinning. Not only could it make for a lousy user experience, but it could lead to an excessive amount of east-west traffic beyond what's been planned for.
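The banker's trip boils down to one question: is the UAG carrying the display traffic in the same site as the desktop? Here's a minimal sketch comparing the two models, assuming (as described above) that the GSLB picks the UAG before CPA redirects to the open session, while Universal Broker picks the Pod before any UAG is contacted. The function names are hypothetical.

```python
from typing import List, Optional, Tuple


def traditional_display_path(gslb_site: str,
                             session_site: Optional[str]) -> Tuple[List[str], bool]:
    """GSLB/CPA model: the GSLB picks the site, and therefore the UAG, first."""
    uag_site = gslb_site                      # this UAG handled the primary protocol
    desktop_site = session_site or gslb_site  # CPA redirects to an existing session
    path = [f"UAG ({uag_site})", f"desktop ({desktop_site})"]
    return path, uag_site != desktop_site     # True means hair-pinning


def universal_broker_display_path(nearest_site: str,
                                  session_site: Optional[str]) -> Tuple[List[str], bool]:
    """Universal Broker model: the Pod is chosen before any UAG sees traffic."""
    desktop_site = session_site or nearest_site
    uag_site = desktop_site                   # client goes straight to that Pod's UAG
    path = [f"UAG ({uag_site})", f"desktop ({desktop_site})"]
    return path, uag_site != desktop_site


# The banker connects from Wyoming; his session is still open in New York.
print(traditional_display_path("Los Angeles", "New York"))
print(universal_broker_display_path("Los Angeles", "New York"))
```

The first call returns `(['UAG (Los Angeles)', 'desktop (New York)'], True)`, the hair-pin; the second returns `(['UAG (New York)', 'desktop (New York)'], False)`.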

This example is extreme, but by no means outside the realm of possibility. There are certainly ways you could fine-tune the coordination of your third-party global load balancer and Horizon environments to mitigate this challenge. (For instance, I've heard F5 has an APM module that can more accurately route a user to a Pod where they already have an established session.) However, I don't think creating a bulletproof GSLB/CPA solution is a cakewalk, and that's why this challenge is called out in the Tech Zone article, Providing Disaster Recovery for VMware Horizon. If you don't get things just right, the impact could be fairly brutal on your user experience and networks, possibly in the middle of an outage, when hair-pinning challenges are the last thing you need. Particularly acute challenges can arise with cloud-hosted desktops. Both the AWS and GCVE guides warn against this potential hair-pinning. In these scenarios, hair-pinning has the potential to saturate capacity on NSX gateways and kill session density. Further, it could lead to some expensive and senseless traffic flow between on-premises and cloud environments. Accordingly, it's recommended to leverage Universal Broker instead of CPA for these Horizon 8 based cloud deployments.

Despite working with Unified Access Gateway for over half a decade now, I was completely blindsided by this esoteric gotcha. It took a while for this challenge to sink in, but when it did, oh boy, did it! Fortunately, we can completely sidestep this potential pitfall by adopting Universal Broker. With Universal Broker, no traffic hits a UAG appliance until after there's been a successful authentication against the cloud and an ideal path has been determined. So, by adopting Universal Broker we avoid this esoteric, but real, pitfall with on-premises Horizon environments while laying the groundwork for successful multi-site adoption with cloud-hosted virtual desktops. Speaking of cloud-hosted desktops, let's talk about Universal Broker and its role in the next-gen Horizon Control Plane for Horizon on Azure.

Universal Broker And The next-gen Horizon Control Plane (Titan)

For the next-gen Horizon Control Plane, currently limited to Horizon on Azure, Universal Broker is an essential built-in component. It plays a critical role in the transformation to a Horizon thin edge, helping eliminate the need to deploy Horizon Connection Servers within Azure. Instead, there's a lightweight deployment of a thin Horizon Edge on top of native Azure, consisting of only UAG appliances and Horizon Edge Gateways. The rest of the traditional infrastructure used to manage the Horizon environment is shifted to the next-gen Horizon Control Plane. This reduces consumption of Azure capacity while simplifying the deployment and maintenance of Horizon.

Again, with this next-gen architecture Universal Broker is an absolute requirement, an integral part of the new model. So much so that it doesn't even get specifically called out in the next-gen reference architecture or official documentation. Its functionality requires no extra configuration and is assumed available as entitlements are created. While this new, stealthier iteration of Universal Broker is certainly easier to deploy, it's only available with the next-gen Horizon Control Plane, so it's limited to Horizon on Azure for now. For those leveraging Horizon 8 deployments on-premises or on top of various SDDC public clouds (AVS, GCVE, AWS), v1 of Universal Broker is still relevant. At Explore Europe 2022, VMware announced its intent to extend the next-gen architecture to Horizon 8, but it's limited in scope as of today and there's no committed timeline for extending its newer iteration of Universal Broker to Horizon 8. In the meantime, there's the traditional Universal Broker deployment that's been available for some time now and, while it's not as easy to deploy as v2, it's not rocket science.

Setting Up Universal Broker With My On-premises Lab

Since the setup of Universal Broker for Horizon 8 is well documented, I'm just going to provide a high-level overview, call out some specific challenges, and include links to relevant documentation for those who want to get into the nitty-gritty. The official documentation calls out four major steps for setting up Universal Broker for Horizon 8 environments. Assuming you already have a current version of Horizon Cloud Connector up and running, the next steps are:

- Installing the Universal Broker Plugin on all Connection Servers

- Configuring your UAG appliances with the required JWT settings

- Enabling Universal Broker in your Horizon Universal Console

- Configuring multi-cloud entitlements within the Universal Console

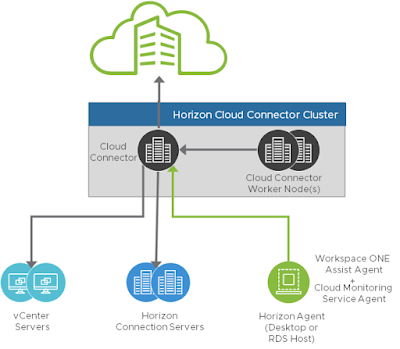

Again, these steps are well documented in both the official documentation for Horizon Cloud Control Plane and a really nifty Tech Zone article called "Configuring Universal Broker For Horizon." Below is an excellent graphical representation of the architecture.

While in hindsight the configuration of UAG was relatively straightforward, I personally struggled with it. The relevant settings are configurable through the UAG appliance's web interface by navigating to advanced settings and clicking the gear icon for JWT. All the settings to input relate to the supported Horizon Pod itself and are specifically detailed in the documentation. First off, there's the cluster name of the Horizon Pod. Unless you've been supporting CPA, you've probably never been aware of this property. It's case-sensitive, so use caution. (I had challenges with this portion of the setup because CPA had been enabled for my Pod in the past, though it wasn't currently in use. That had the effect of making the displayed cluster name differ from the one needed for the UAG setting.)

Another setting I initially struggled with was the Dynamic Public Key URL. This essentially amounts to appending "/broker/publicKey/protocolredirection" to the internal FQDN of your Horizon Pod. (I would think it's always going to be the same FQDN used for your "Connection Server URL" under Horizon Edge settings.) Likewise, the "Public key URL thumbprints" value is the certificate thumbprint of the FQDN used for the Dynamic Public Key URL. (Again, probably the same as your Connection Server URL Thumbprint under Horizon Edge settings.) So, overall, the values are fairly similar to values you're already using for your UAG's Horizon settings, but they have weird, fancy names that can throw you off. For context, here are the settings I typically use for my Horizon Edge settings:

And here are the JWT settings for Universal Broker:

In hindsight it all makes sense, but in the context of setting up a new solution it was a bit confusing at first. Another gotcha I bumped into was the need to define your desktop pools as "Cloud Managed" when initially creating them, prior to creating your multi-cloud assignments within the Universal Console. Fortunately, once you know to do this, the procedure is simple enough. As you're walking through the desktop pool creation wizard, check the "Cloud Managed" box and you're good to go.
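For anyone who wants to double-check the JWT values described earlier before typing them into UAG, here's a small helper sketch. Assumptions, loudly: the Dynamic Public Key URL is assumed to use `https://`; the `alg=XX:XX:...` thumbprint layout is my guess at a UAG-style format, so confirm the exact format your UAG version expects in its documentation; and all function names are hypothetical. `fetch_thumbprint` uses Python's standard `ssl` module and skips chain validation, which is fine for reading a thumbprint but not for trusting a server.

```python
import hashlib
import ssl
from typing import Optional

PUBLIC_KEY_PATH = "/broker/publicKey/protocolredirection"


def dynamic_public_key_url(internal_fqdn: str) -> str:
    """Append the documented path to the Pod's internal FQDN (bare FQDN in, https assumed)."""
    return f"https://{internal_fqdn.strip().rstrip('/')}{PUBLIC_KEY_PATH}"


def thumbprint_from_der(der_cert: bytes, alg: str = "sha256") -> str:
    """Hash a DER-encoded certificate and format it as alg=XX:XX:... (assumed format)."""
    digest = hashlib.new(alg, der_cert).hexdigest().upper()
    pairs = [digest[i:i + 2] for i in range(0, len(digest), 2)]
    return f"{alg}={':'.join(pairs)}"


def fetch_thumbprint(fqdn: str, port: int = 443, alg: str = "sha256") -> str:
    """Grab the certificate the FQDN presents (no chain validation) and hash it."""
    pem = ssl.get_server_certificate((fqdn, port))
    return thumbprint_from_der(ssl.PEM_cert_to_DER_cert(pem), alg)


def cluster_name_issue(entered: str, pod_cluster_name: str) -> Optional[str]:
    """The cluster name is case-sensitive, so flag near misses explicitly."""
    if entered == pod_cluster_name:
        return None
    if entered.lower() == pod_cluster_name.lower():
        return "case mismatch"
    return "name mismatch"


print(dynamic_public_key_url("cs01.corp.example.com"))  # hypothetical FQDN
```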

Again, there's a great article in Tech Zone that covers the setup of Universal Broker called "Configuring Universal Broker For Horizon." I'm sorry to say I didn't learn about this article's existence until I was almost completely done with the setup. Instead, I slogged through the entire setup using the official documentation, "Administration of Your Horizon Cloud Tenant Environment and Your Fleet of Onboarded PODs." It was doable, just not the smooth and enjoyable guidance of a well put together Tech Zone article.

Other portions of the setup were fairly straightforward. For instance, installing the Universal Broker Plugin was just a matter of locating the right version for my Connection Servers, accepting defaults, and going next-next-finish. Configuring the Broker service in the Universal Console was easy as well, particularly because I chose to go with a subdomain of vmwarehorizon.com rather than a customer-provided FQDN. This avoids the need to generate any SSL certs or create any external DNS records, which I found to be a lovely convenience. (Otherwise, you can go with the customer-provided option, then enter your own FQDN and provide a cert.)

With the plug-ins installed on your Connection Servers, UAGs properly configured, and Broker enabled, you're ready to start creating your multi-cloud entitlements from the Universal Console. It's a relatively straightforward process, so I'll leave that to the official documentation.

One final thing I'd like to point out is that you can configure UAGs to support Universal Broker without disrupting their use for traditional UAG access to Horizon environments. So folks can continue to hit these UAG devices directly for access to traditional Horizon pools while, in parallel, the same UAGs support access through Universal Broker. Further, while the local pools used for Universal Broker multi-cloud entitlements are managed from the Universal Console, the entitlements made from the cloud trickle down to the local pools, so these local pools are also accessible through direct UAG connections.

Current Limitations Of Universal Broker

While Universal Broker presents some interesting innovation and a compelling future, there are definitely some limitations. Most notably, Universal Broker v1 doesn't support application pools across multiple Pods. You can deliver a single-Pod application pool, but you're not going to get load balancing for application pools across multiple Pods. So, as far as v1 of Universal Broker is concerned, there isn't parity between application pools and virtual desktop pools. Another limitation as of today is no support for mixing Horizon 8 based Pods and Horizon Cloud based Pods (Horizon on Azure). So, for example, you can't have a multi-cloud entitlement that spans an on-premises Horizon 8 Pod and a Horizon on Azure environment. However, you could have a multi-cloud assignment that spans an on-premises Horizon 8 Pod and a Horizon 8 Pod running on top of AWS, GCVE or AVS. It comes down to whether your deployments use traditional Horizon 8 Connection Servers or not. If they do, then they can share multi-cloud entitlements with one another (assuming they're not application pools).
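The two v1 limits above amount to a simple pre-flight rule for any proposed multi-cloud entitlement. Here's a sketch of that rule; the platform labels and function name are hypothetical, and the check reflects only the limitations as described in this post, not anything the Universal Console exposes.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PodInfo:
    name: str
    platform: str  # hypothetical labels: "horizon8" (Connection Server based) or "horizon_cloud_azure"


def entitlement_problems(pool_type: str, pods: List[PodInfo]) -> List[str]:
    """Check a proposed multi-cloud entitlement against the v1 limits described above."""
    problems: List[str] = []
    if len({p.platform for p in pods}) > 1:
        problems.append("cannot mix Horizon 8 and Horizon Cloud Pods in one entitlement")
    if pool_type == "application" and len(pods) > 1:
        problems.append("application pools are not load balanced across multiple Pods in v1")
    return problems


on_prem = PodInfo("On-prem", "horizon8")
avs = PodInfo("AVS", "horizon8")
azure = PodInfo("Horizon on Azure", "horizon_cloud_azure")
print(entitlement_problems("desktop", [on_prem, avs]))    # []
print(entitlement_problems("desktop", [on_prem, azure]))  # one problem: mixed platforms
```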

There are also challenges regarding support for stronger forms of authentication. Leveraging the built-in capabilities of UAG, there's support for RADIUS and RSA. However, there's no support for smart cards or certificate auth. Further, there isn't support for direct SAML integrations between UAGs and third-party IDPs, one of the fastest growing methods for strong authentication within the DMZ through UAG. So there's no support for direct integrations with IDPs like Okta, Ping or Azure. That said, there is support for Workspace ONE Access, which in turn can be integrated with these third-party solutions. So Workspace ONE Access can be configured as a trusted IDP for Universal Broker, which in turn can leverage third-party solutions that have been configured as trusted IDPs for WS1 Access. (Kind of like the good old days before UAG started supporting direct integrations.)
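The authentication support matrix above can be summarized as a small lookup. This is just a restatement of the post's list in code; the method labels and the wording of each plan are hypothetical, and the matrix reflects Universal Broker v1 as described here, not an exhaustive or official list.

```python
from typing import Optional

# Hypothetical labels summarizing the Universal Broker v1 support described above.
SUPPORTED_AT_UAG = {"radius", "rsa"}
UNSUPPORTED = {"smart_card", "certificate", "direct_saml"}
VIA_WS1_ACCESS_ONLY = {"okta", "ping", "azure"}


def auth_plan(method: str) -> Optional[str]:
    """Return how a method could be used with Universal Broker v1, or None if it can't."""
    m = method.lower()
    if m in UNSUPPORTED:
        return None  # explicitly called out as unsupported
    if m in SUPPORTED_AT_UAG:
        return "configure on the UAG appliances"
    if m == "workspace_one_access":
        return "configure WS1 Access as the trusted IDP for Universal Broker"
    if m in VIA_WS1_ACCESS_ONLY:
        return "federate with WS1 Access, then trust WS1 Access from Universal Broker"
    return None  # anything else isn't covered by this sketch


print(auth_plan("Okta"))
```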

The integration of Universal Broker with WS1 Access makes for an interesting discussion because there's a lot of confusion about whether one solution can replace the other. While there are some slightly overlapping capabilities in the two products, by and large they are complementary solutions with very different competencies. Sure, Workspace ONE Access is a way to provide users with a single URL for access to multiple Horizon Pods, but the solution is squarely focused on identity and wrapping modern authentication around Horizon access. It's by no means a global load balancing solution. Conversely, while Universal Broker can support strong authentication through RADIUS and RSA, its core competency is providing global load balancing based on shortest network path, assignments and current Horizon environment status. So, when you focus on the core competencies of each of these solutions, what they're really good at, combining the technologies is a possibility worthy of consideration. For a great overview of this integration, check out the section "Workspace ONE Access And Horizon Integration" in the Tech Zone article, Platform Integration. Below is a great diagram of the integration.

However, there's an important caveat to be aware of. With this WS1 Access integration, as it stands today, there isn't an option to configure unique WS1 Access policies for the multi-cloud entitlements supported by Universal Broker. Buried in the documentation, under a section called "Horizon Cloud - Known Limitations," it states, "access policies set in Workspace ONE Access do not apply to applications and desktops from a Horizon Cloud environment that has Universal Broker enabled." Instead, all the Universal Broker entitlements are protected by the default access policy of WS1 Access. Depending on your deployment, this may or may not be a deal breaker. If you're using WS1 Access primarily for your Horizon deployment, and all your entitlements have the same access policy requirements, then having them all share the same default access policy could be feasible. However, if you need granular WS1 Access policies for these multi-pod assignments, say stricter requirements for some specific pools than others, this could be a problem. Or if you have a fairly mature WS1 Access deployment and want looser requirements for initial portal access, it could be a challenge. To apply more granular WS1 Access policies to your desktops, you need to fall back to Virtual App Collections, which, unfortunately, are incompatible with Universal Broker.
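Put another way, the known limitation changes which policy actually gates a launch. Here's a minimal sketch of that resolution logic, assuming a simple name-to-policy mapping; the function, resource, and policy names are hypothetical and only restate the documented behavior quoted above.

```python
from typing import Dict


def effective_ws1_policy(resource: str, resource_policies: Dict[str, str],
                         default_policy: str, via_universal_broker: bool) -> str:
    """Which WS1 Access policy gates a launch, per the known limitation quoted above."""
    if via_universal_broker:
        # Resource-specific policies are ignored for Universal Broker entitlements.
        return default_policy
    return resource_policies.get(resource, default_policy)


policies = {"finance-desktops": "mfa-always"}  # hypothetical policy names
print(effective_ws1_policy("finance-desktops", policies, "default-policy", True))   # default-policy
print(effective_ws1_policy("finance-desktops", policies, "default-policy", False))  # mfa-always
```

If the stricter policy on `finance-desktops` matters, the first result is exactly the gap described above.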

The Trajectory Of Universal Broker And next-gen Horizon Control Plane

As called out earlier in this post, VMware recently announced plans to extend the next-gen Horizon Control Plane to Horizon 8 environments for better support of hybrid deployments across on-prem and Azure. Given that Universal Broker is transparent and built into the next-gen Control Plane, extending this new control plane architecture to Horizon 8 shows a lot of promise for addressing the limitations of Universal Broker v1 as of today. Right off the bat, the next-gen Horizon Control Plane supports application pool entitlements across multiple Pods, addressing a long-standing limitation. Further, extending support to Horizon 8 certainly implies that challenges with multi-cloud entitlements across Horizon 8 and Horizon Cloud Pods will be addressed. Finally, there certainly appears to be a commitment to ironing out challenges combining Universal Broker functionality with Workspace ONE Access. With this next-gen architecture, use of an IDP is not only supported but an absolute requirement. As of today, there's a choice between WS1 Access and Azure.

Though there are no firm promises from VMware as of today, with this next-gen Horizon Control Plane and Universal Broker there's a clear trajectory toward addressing a lot of today's challenges.

Conclusion

While there's work to be done and gaps to bridge, I'm still incredibly excited about Universal Broker technology and think every Horizon admin should at least be familiar with it. In some ways it reminds me of Instant Clones circa 2016 or UAG in 2015, back when it was called "Access Point." Both these solutions seemed a little crackpot or science project-ish at the time. They weren't quite ready yet, not done baking until... they just were. Though we definitely had our reasons for being suspicious or dubious upon their initial release, these solutions eventually rounded the corner and established themselves as standard technologies, core to the Horizon stack. I think the case will be the same for Universal Broker simply because it has a lot going for it. First and foremost, it's not a solution looking for a problem, but rather a purpose-built solution for addressing a Horizon-specific requirement. More notably, the way it solves this challenge from the cloud makes it both clever and easy to deploy, while lending Horizon admins a greater degree of autonomy. Eventually, its adoption will become the path of least resistance. I wouldn't necessarily implore admins to rip out their current working implementations of CPA/GSLBs and slam this technology in. However, as new multi-site implementations get stood up, I think the customer base will slowly migrate over to this new solution. By the time it becomes a new standard, we probably won't even call it Universal Broker anymore. It will just be multi-cloud assignments through the Horizon Control Plane, something we take for granted.